I have a Cerbo running the latest beta large firmware. From time to time (about once a week) I run into the following problem:

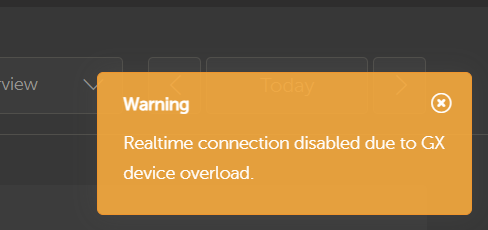

1. In VRM I see the connection problem banner

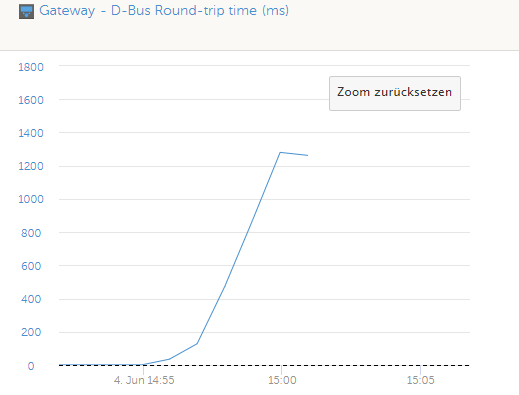

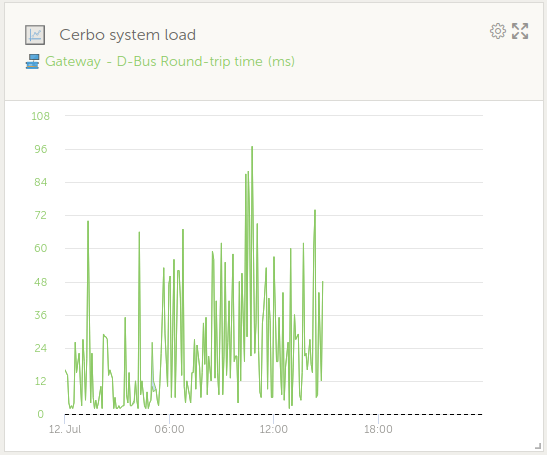

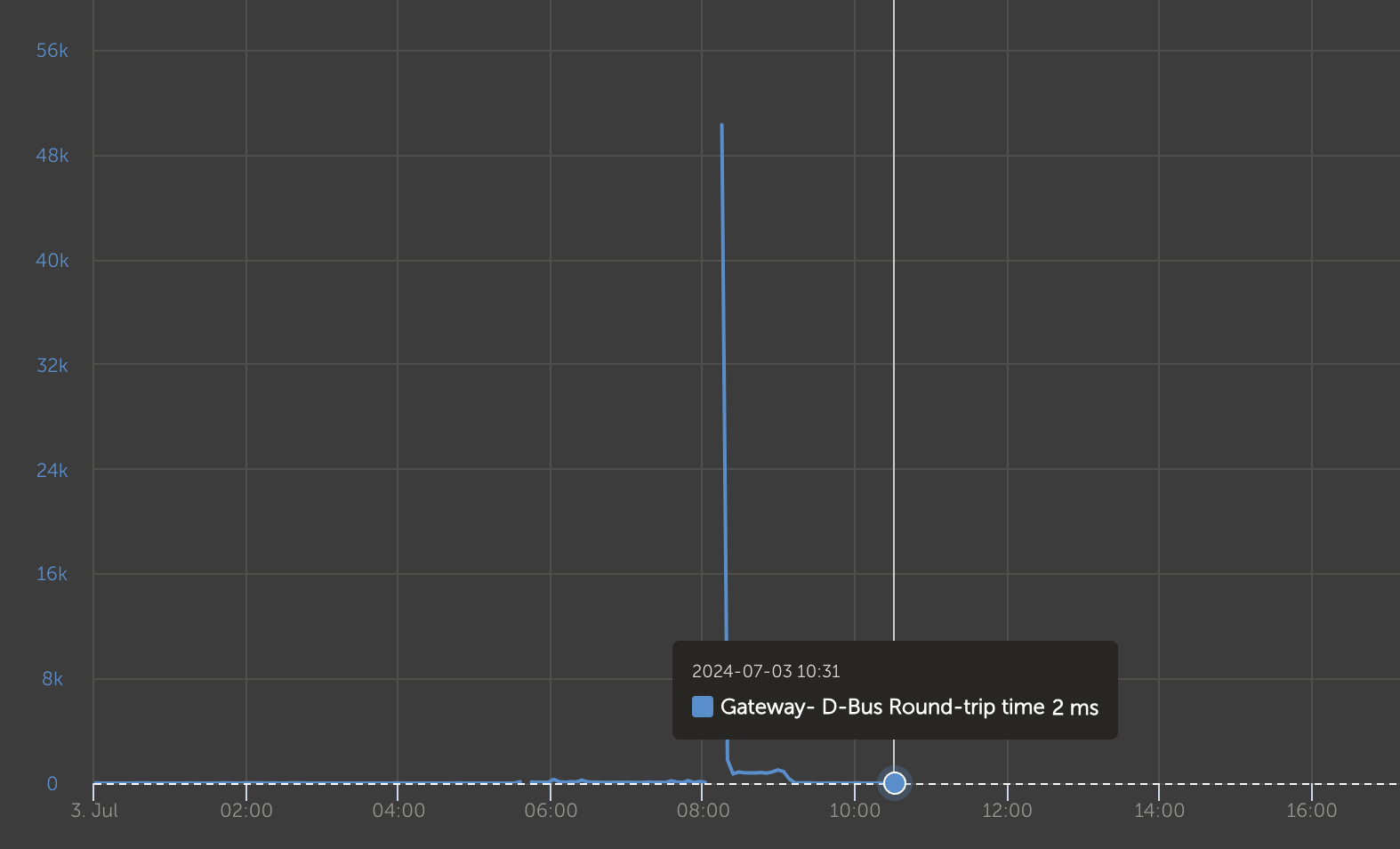

2. At the same time, I see a spike in the dbus round-trip time to about 50 sec (!)

3. At the same time, the Cerbo shows a BMS connection loss error, so the whole system goes offline. I think this happens because of system overload; the BMS itself works correctly.

After the spike the connection time drops, but only to around 500 ms, and it stays at that level until I restart the Cerbo.

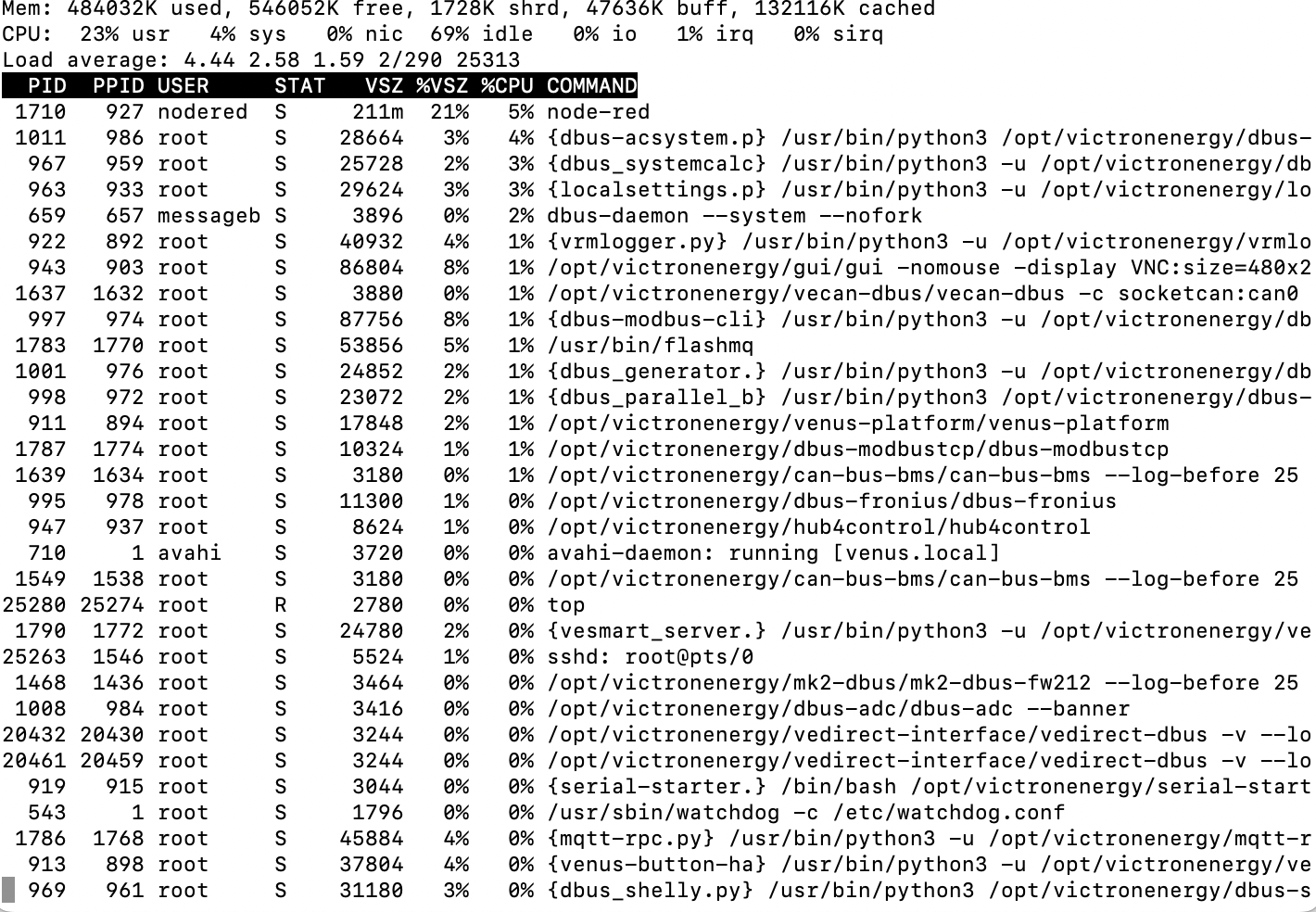

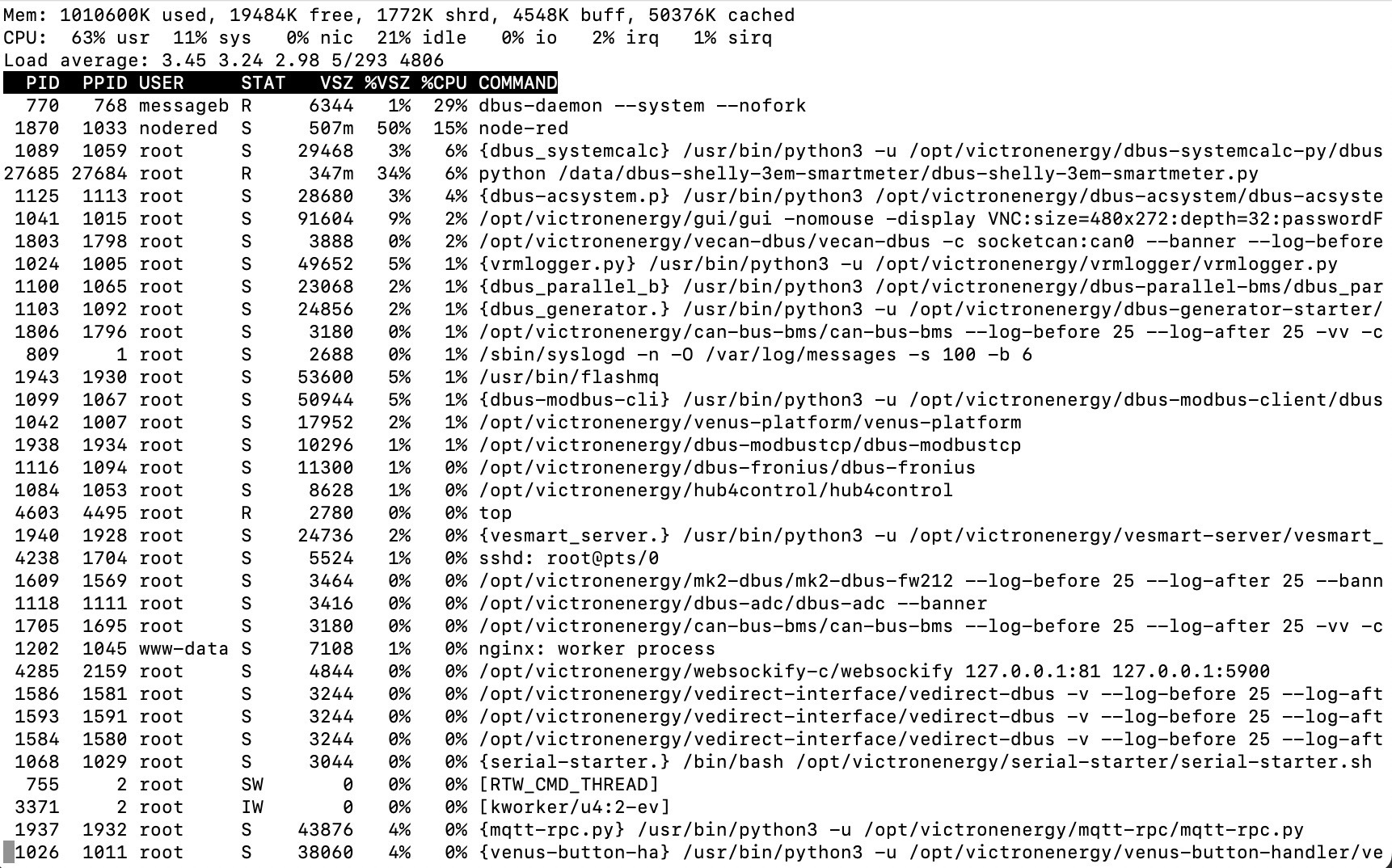

I connected using ssh and here is the log of running services:

I think this might be related to the Shelly 3EM dbus driver, but I'm not sure about it.

What are your thoughts? Where can I find the relevant logs?