System setup: Cerbo GX MK1 with small touchscreen, wired Ethernet connection

Power supply from 48V bus

Pylontech battery, 5x MPPT 150/35, 3-phase MultiPlus-II 5000, Lynx Shunt, 2x Shelly 3EM (grid and AC solar)

Operating system: Current Venus OS Large, ESS active (region: Germany), VRM active

Node-RED is active with some flows that evaluate current production and consumption and alter the grid setpoint in order to optimize daily production.

The old 9.5 kWp AC-coupled solar generator may feed into the grid and gets paid for it; the new 5.7 kWp DC-coupled system is not allowed to feed in.
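To illustrate the kind of rule involved (this is not the actual Node-RED flow, only a minimal Python sketch), the setpoint could be derived so that at most the AC-coupled surplus is exported. The function name, the parameter names, and the 9500 W cap are assumptions made for the example; the sign convention assumed here is that a negative grid setpoint means feed-in.

```python
def grid_setpoint_w(ac_pv_w: float, load_w: float, max_export_w: float = 9500.0) -> int:
    """Return a grid setpoint in watts (negative = feed-in, by assumption).

    Only the surplus of the AC-coupled generator over the current load is
    exported, so DC-coupled production is never pushed into the grid.
    """
    # Surplus of the AC-coupled PV over consumption; never negative.
    surplus_w = max(0.0, ac_pv_w - load_w)
    # Cap the export at an assumed upper limit (hypothetical default: 9500 W).
    export_w = min(surplus_w, max_export_w)
    # Negate so that export shows up as a negative setpoint.
    return -int(round(export_w))


if __name__ == "__main__":
    # Example: 6000 W AC PV against 1500 W load.
    print(grid_setpoint_w(6000, 1500))
```

Clamping the value inside the calculation means that a bad reading can push the setpoint no further than the cap, which limits the damage if the flow stops updating it.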

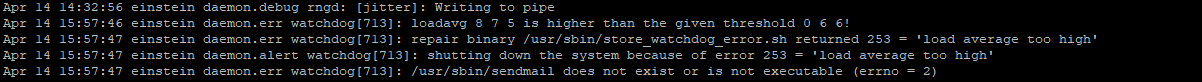

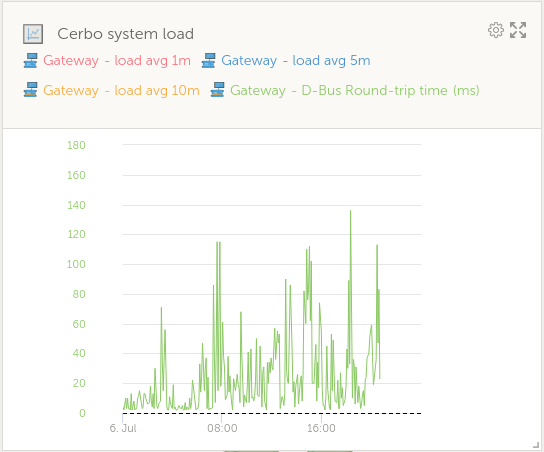

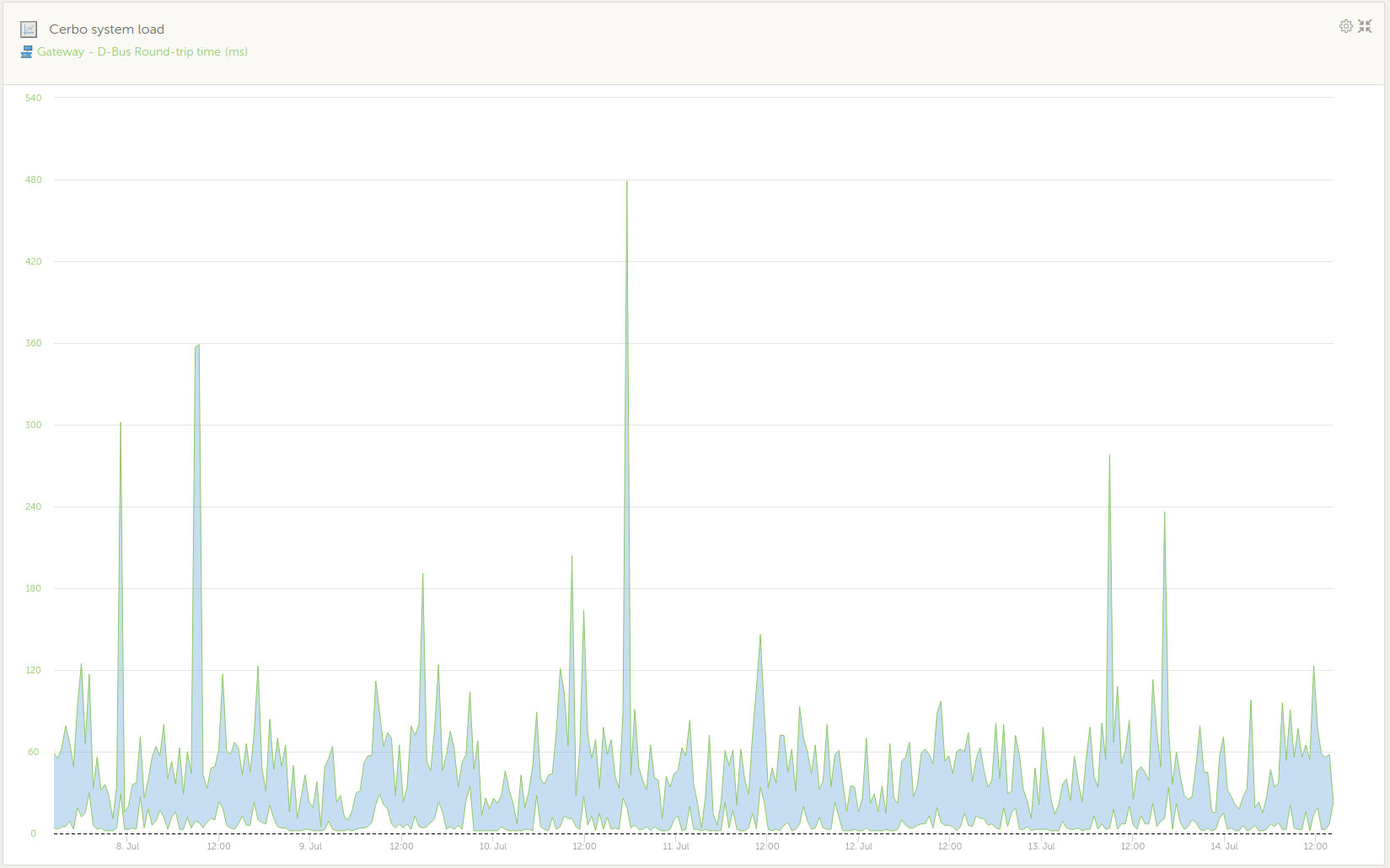

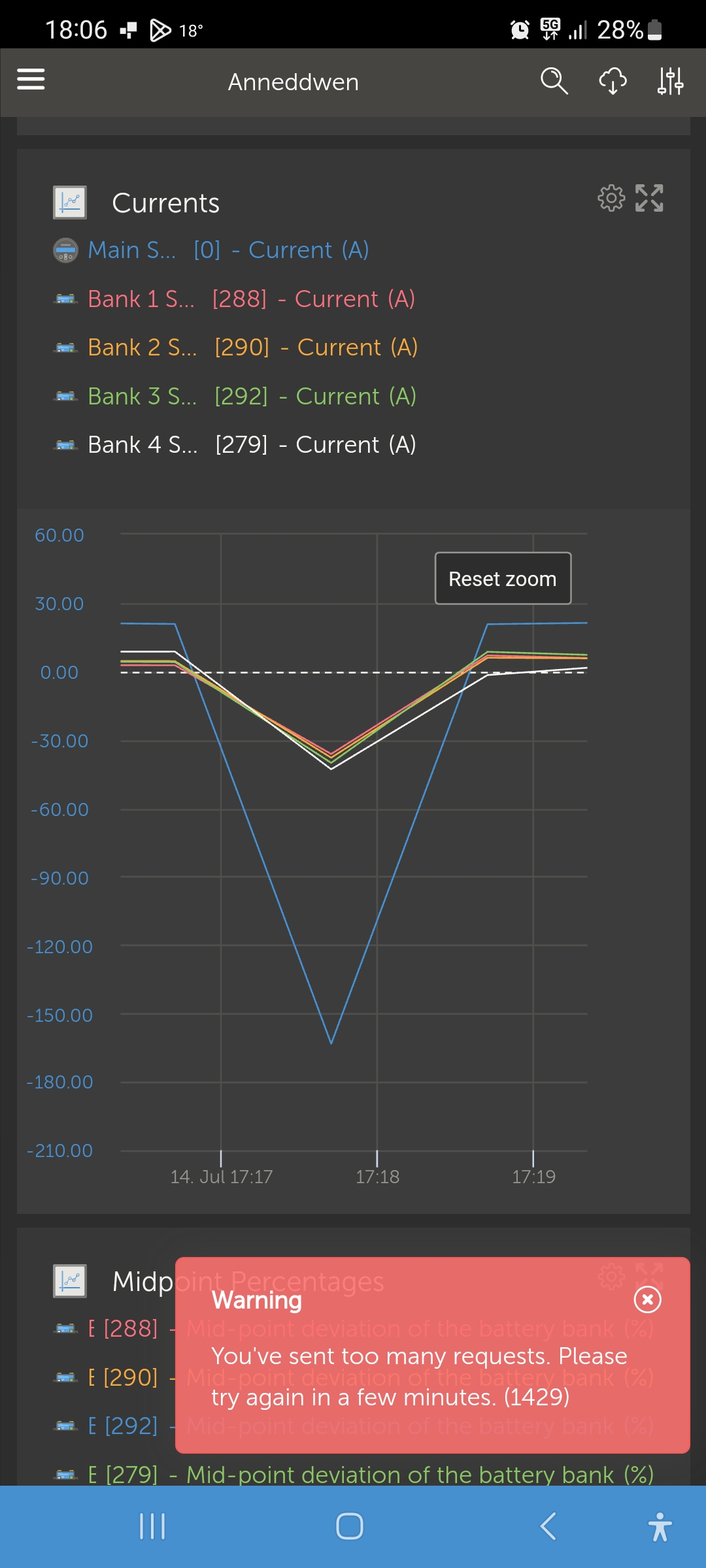

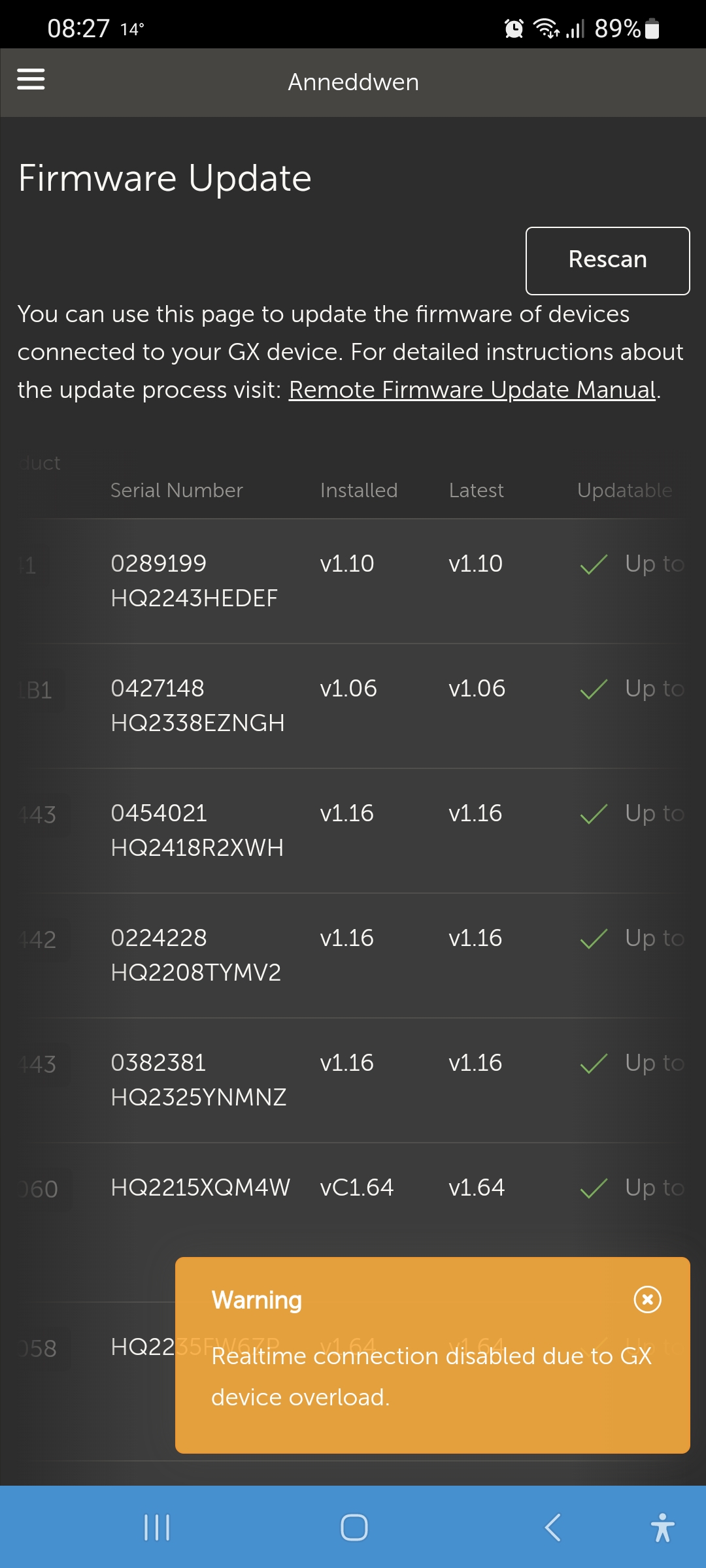

Symptoms: The system randomly reboots, sometimes leaving the grid setpoint at a large negative value.

dmesg -H only shows entries since the last reboot.
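Since the kernel log does not survive a reboot, one option might be to at least record when each boot happened, so the reboots can be correlated with other events. The following is only a sketch: it derives the boot time from `/proc/uptime` (a standard Linux interface) and appends it to a file. The log path `/data/boot-times.log` is hypothetical, and it assumes that `/data` is persistent storage on the device and that the system clock is already correct when the script runs.

```python
from datetime import datetime, timedelta, timezone

UPTIME_PATH = "/proc/uptime"        # first field: seconds since boot
LOG_PATH = "/data/boot-times.log"   # hypothetical path, assumed persistent


def read_uptime_s(path: str = UPTIME_PATH) -> float:
    """Read the uptime in seconds from /proc/uptime."""
    with open(path) as f:
        return float(f.read().split()[0])


def boot_time(now: datetime, uptime_s: float) -> datetime:
    """Boot time is the current time minus the uptime."""
    return now - timedelta(seconds=uptime_s)


def format_record(now: datetime, uptime_s: float) -> str:
    """Build one log line with the derived boot time and the uptime."""
    booted = boot_time(now, uptime_s).replace(microsecond=0)
    return f"booted={booted.isoformat()} uptime_s={int(uptime_s)}"


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    # Append, so earlier boot records are kept across reboots.
    with open(LOG_PATH, "a") as log:
        log.write(format_record(now, read_uptime_s()) + "\n")
```

Run periodically, this would show a new `booted=` value after every restart, and the last `uptime_s` before the change gives a rough idea of when the system went down.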

The reboot-on-connection-loss option in the VRM settings is off.

Sometimes the reboot doesn't complete: the screen shows a small white square in the middle of a black background and remains unresponsive. The Node-RED flow and the VRM connection are active, but neither the local nor the remote console works. Power cycling the Cerbo resolves the problem, but sometimes takes two attempts.

Is there a log file somewhere that could give a hint at what might be going on?

Any ideas?