Hi,

I am new to Victron, having only recently acquired a small system ( Solar controller, BMV-712 and Volt/Temp sensor ).

My question relates to the Battery Monitor - BMV-712 Smart - and its Charge Efficiency Factor ( CEF ) setting.

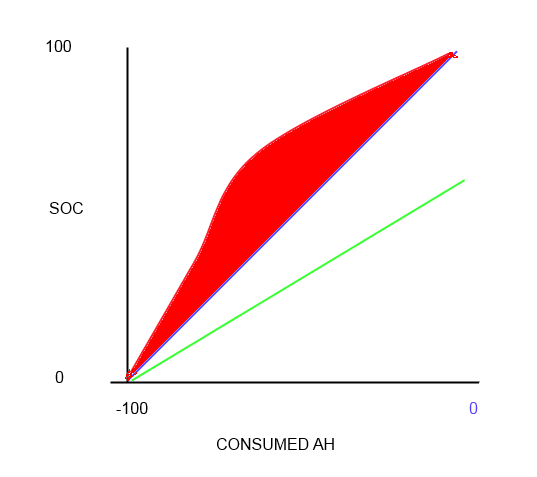

I recently ran the following test. I set the Battery Amp Hours to 100Ah ( it makes the maths easier ) and the CEF to 100%, then changed the SOC to a level below 100%, as it would be if I had used some battery power. I then watched how fast the SOC rose as current went through the shunt to recharge the battery.

The results were what I expected. Every 1Ah of charge that went into my battery ( according to the BMV ) caused a 1% increase in SOC. That is what I would understand as 100% efficient.

I then changed the CEF to 50%, set the SOC to a level below 100% as above, and again watched how fast the SOC rose as current went through the shunt. The results were NOT what I expected.

Instead, for every 1Ah that went into the battery ( according to the BMV ), the SOC went up by 2%. That is what I would understand as 200% efficiency, not 50% efficiency.

With 50% efficiency, I would expect every 1Ah I put into the battery to raise the SOC by only 0.5%, with the rest lost to heat, battery chemistry or other factors.
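To make my expectation concrete, here is a rough Python sketch. It is only my own model of the arithmetic, not how the BMV firmware actually works: the hypothetical `expected_soc_gain` discounts the charge by the CEF (what I expected), while the hypothetical `observed_soc_gain` divides by the CEF, which is simply the pattern that fits my two test results.

```python
def expected_soc_gain(ah_in: float, capacity_ah: float, cef: float) -> float:
    """SOC rise (percentage points) I expected: only CEF x charge is stored."""
    return ah_in * cef / capacity_ah * 100.0


def observed_soc_gain(ah_in: float, capacity_ah: float, cef: float) -> float:
    """Assumed pattern fitting my two tests: charge appears divided by CEF."""
    return ah_in / cef / capacity_ah * 100.0


# 1Ah into a 100Ah battery, at CEF 100% and CEF 50%
for cef in (1.0, 0.5):
    print(f"CEF {cef:.0%}: expected {expected_soc_gain(1.0, 100.0, cef):.1f}% per Ah, "
          f"observed {observed_soc_gain(1.0, 100.0, cef):.1f}% per Ah")
```

At CEF 100% both models give 1% per Ah, which matches my first test; at CEF 50% they split into 0.5% (expected) and 2% (what I saw).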

I am perplexed. Nothing is 100% efficient when it comes to charging batteries or converting sunlight to power, so why would the SOC move towards 100% at twice the rate that amp hours are going into the battery?

Am I perhaps not understanding the true meaning of % Efficiency as it is defined in the Northern Hemisphere? Or is my BMV-712 getting confused because it is located south of the Equator, and is therefore hanging upside down? ( But it could not be that, because we all know that the earth is flat! :-) )

I would appreciate any input, opinion or words to set me straight.

TIA